In the beginning there was a chat with @ortelius outside the Namenlos bar at FOSS4G in Bonn. Jef insisted on doing a benchmark of MVT server implementations at the next FOSS4G. With my experience from the FOSS4G WMS benchmarks, where I supported Marco Hugentobler in the QGIS team, I knew how much time such an exercise takes and was rather sceptical.

Two years later I was at a point in the development of t-rex, my own vector tile server, where I needed just this benchmark. I was replacing the built-in webserver with a new asynchronous high-performance webserver and wanted to measure the performance win of this and other planned improvements. So I decided to implement an MVT benchmark before starting any performance tuning sessions. The dataset I wanted to use was Natural Earth, which has a decent size for a worldwide dataset. A few weeks earlier, Gretchen Peterson had blogged about a Natural Earth MVT style she had done for Tegola, Jef’s tile server contestant.

I got Gretchen’s permission to use it, so I started implementing the same map with t-rex. I gave myself 5 days for benchmarking and refactoring t-rex, but at the end of day one I had only about 5 of 37 layers finished. So I postponed my plan for a complete benchmark and used the partially finished tileset for my further work.

My idea of performance tuning was to run a profiling tool on my code and look into the hot spots. But the map was so slow in its current state that it was clear some database operations had to be the reason. Instead of starting to profile code, I first added a drilldown command to t-rex. This command returns statistics for all tile levels at a set of given positions.
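To make concrete which tiles such a drilldown has to visit, here is a minimal Python sketch. It is not t-rex's implementation; it only assumes the common XYZ (Web Mercator) tiling scheme, and the names `lonlat_to_tile` and `drilldown_tiles` are made up for this illustration.

```python
import math

def lonlat_to_tile(lon, lat, zoom):
    """Return (x, y) of the XYZ tile containing a WGS84 position at `zoom`."""
    n = 2 ** zoom
    lat_rad = math.radians(lat)
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that lon=180 or extreme latitudes stay inside the tile grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)

def drilldown_tiles(points, minzoom, maxzoom):
    """List the (zoom, x, y) tiles covering each position at every zoom level."""
    return [(z, *lonlat_to_tile(lon, lat, z))
            for lon, lat in points
            for z in range(minzoom, maxzoom + 1)]

# Hypothetical usage: one position, zoom levels 0 to 2.
for tile in drilldown_tiles([(0.0, 0.0)], 0, 2):
    print(tile)
```

Timing the generation of each listed tile gives per-zoom statistics of the kind described above.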

The returned numbers revealed a very slow layer called ne_10m_ocean, which turned out to be a single multipolygon covering the whole earth. So step two wasn’t about code tuning, but about dividing polygons into pieces which can make better use of a spatial index. Remembering Paul Ramsey mentioning ST_Subdivide for exactly this case, I threw some SQL at the PostGIS table:

    CREATE TABLE ne_10m_ocean_subdivided AS
    SELECT ST_Subdivide(wkb_geometry)::geometry(Polygon,3857) AS wkb_geometry, featurecla, min_zoom
    FROM ne_10m_ocean

The query for using the table with t-rex is a bit more complicated:

    SELECT ST_Multi(ST_Union(wkb_geometry)) AS wkb_geometry, featurecla, min_zoom
    FROM ne_10m_ocean_subdivided
    WHERE min_zoom::integer <= !zoom! AND wkb_geometry && !bbox!
    GROUP BY featurecla, min_zoom
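The `!zoom!` and `!bbox!` placeholders are filled in per tile request. How t-rex expands them internally is not shown here, so the following Python sketch is only an assumption-laden illustration: it computes the Web Mercator extent of an XYZ tile and substitutes it as a PostGIS `ST_MakeEnvelope` call. The helper names `tile_bbox_3857` and `render_query` are hypothetical.

```python
import math

# Half the width of the Web Mercator world in metres (EPSG:3857).
ORIGIN = math.pi * 6378137.0

def tile_bbox_3857(z, x, y):
    """Return (minx, miny, maxx, maxy) of an XYZ tile in EPSG:3857."""
    size = 2 * ORIGIN / 2 ** z
    minx = -ORIGIN + x * size
    maxy = ORIGIN - y * size  # XYZ rows count downwards from the north
    return (minx, maxy - size, minx + size, maxy)

def render_query(template, z, x, y):
    """Replace the !zoom! and !bbox! placeholders for one tile (illustrative only)."""
    minx, miny, maxx, maxy = tile_bbox_3857(z, x, y)
    envelope = f"ST_MakeEnvelope({minx}, {miny}, {maxx}, {maxy}, 3857)"
    return template.replace("!zoom!", str(z)).replace("!bbox!", envelope)

template = "WHERE min_zoom::integer <= !zoom! AND wkb_geometry && !bbox!"
print(render_query(template, 3, 4, 2))
```

The `&&` operator then compares bounding boxes only, which is exactly the test a spatial index can answer quickly.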

And the result? The creation of a vector tile went from several seconds to far below one second. Lesson learned: before tuning anything else, tune your data!
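Why subdividing helps can be illustrated with plain bounding boxes. The sketch below is a simplified model, not what PostGIS does internally: it stands in for the subdivided pieces with a regular grid (ST_Subdivide actually splits by vertex count), and the tile extent is a made-up example.

```python
def intersects(a, b):
    """True if two (minx, miny, maxx, maxy) boxes overlap."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

def area(box):
    return (box[2] - box[0]) * (box[3] - box[1])

def grid_boxes(extent, n):
    """Split an extent into n x n equal boxes, standing in for subdivided pieces."""
    minx, miny, maxx, maxy = extent
    w, h = (maxx - minx) / n, (maxy - miny) / n
    return [(minx + i * w, miny + j * h, minx + (i + 1) * w, miny + (j + 1) * h)
            for i in range(n) for j in range(n)]

def fetched_fraction(boxes, tile, extent):
    """Share of the total area whose bounding box passes the index filter."""
    return sum(area(b) for b in boxes if intersects(b, tile)) / area(extent)

world = (-180.0, -90.0, 180.0, 90.0)
tile = (7.0, 46.0, 8.0, 47.0)  # hypothetical small tile extent

print(fetched_fraction([world], tile, world))                 # one world-sized geometry
print(fetched_fraction(grid_boxes(world, 16), tile, world))   # 256 small pieces
```

A single world-covering geometry matches every tile, so the index filters nothing and the whole multipolygon has to be processed each time. With small pieces, only the few overlapping the tile are fetched.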

I spent the rest of the week integrating the shiny new web server into t-rex, which ended up (together with other new features like Fake-Mercator support) in a major new release, 0.9.

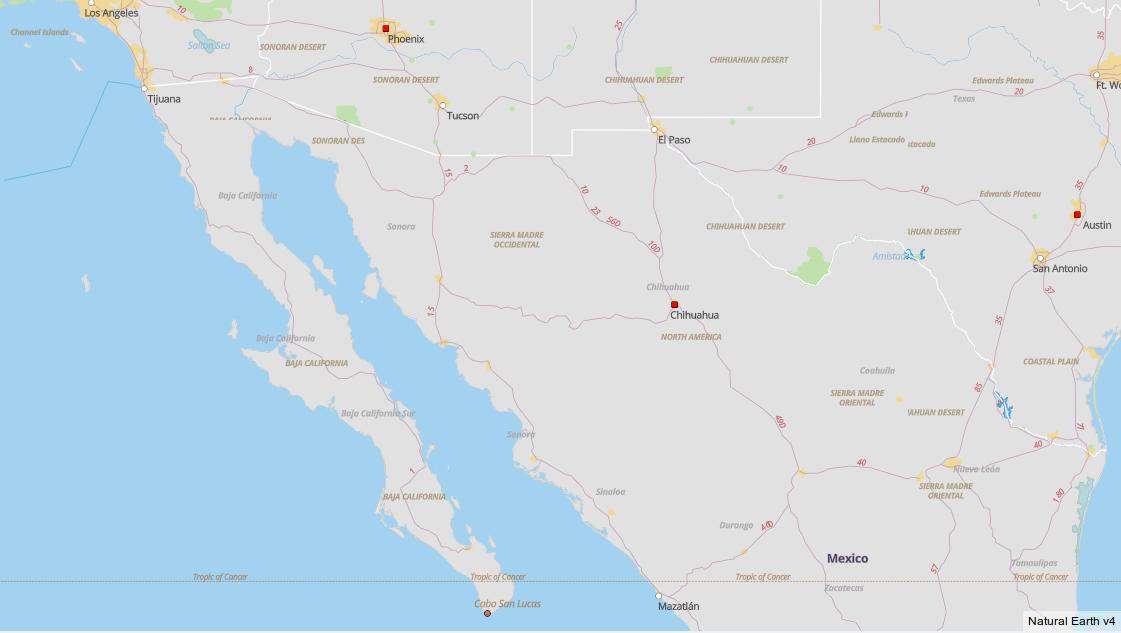

In the meantime, my colleague Hussein finished the Natural Earth map, but I decided to relabel it from a benchmark to a t-rex example project. While completing the map I remembered a simpler map based on Natural Earth data, which we had used for some exercises in our vector tile courses. The style needs only 5 vector tile layers and is therefore much easier to implement. I simplified this style a bit from Petr Pridal’s Mapbox GL JS offline example, resulting in a map which still looks quite pretty:

So I had a new base for an MVT benchmark, which was enough motivation to finish this project before FOSS4G Dar es Salaam. An important goal was to take as much work as possible away from other projects implementing this benchmark, so even starting the benchmark database is a single Docker command. During the conference I talked to several developers and power users of other vector tile servers to give them a first-hand introduction.

So finally, here is the MVT benchmark, ready for contributions. Have a look at it and let’s revive the benchmark tradition at FOSS4G 2019 in Bucharest!

Pirmin Kalberer (@implgeo)